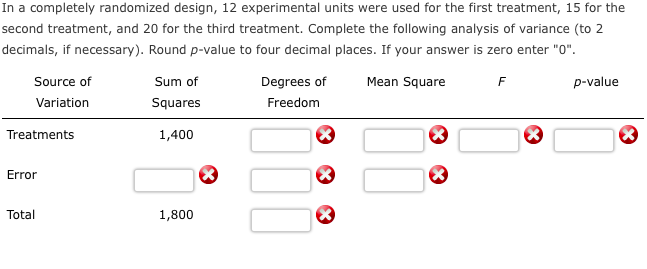


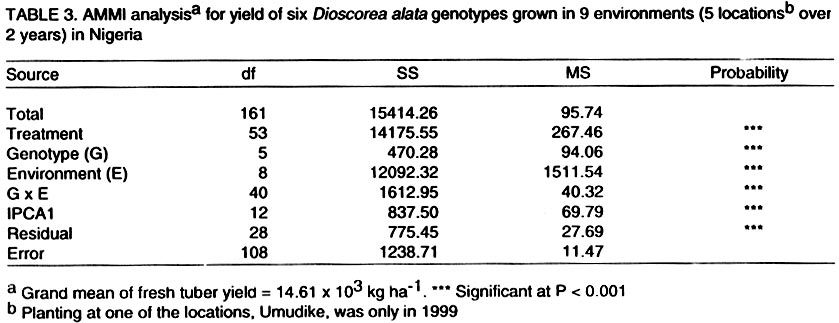

The ANOVA Procedure.

How do you calculate degrees of freedom of treatment and error?

With 12 observations in 4 groups, the number of degrees of freedom of treatment is 4 – 1 = 3 and the number of degrees of freedom of error is 12 – 4 = 8. Dividing each sum of squares by its degrees of freedom then gives the mean squares.
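The arithmetic above can be sketched in a few lines of Python. This is a minimal illustration that assumes a one-way layout with N = 12 observations in k = 4 groups and the sums of squares used in this example (30 for treatment, 48 for error). The function name `anova_from_sums` is hypothetical.

```python
def anova_from_sums(ss_treatment: float, ss_error: float,
                    n_obs: int, n_groups: int) -> dict:
    """Build the one-way ANOVA quantities from precomputed sums of squares."""
    df_treatment = n_groups - 1          # k - 1
    df_error = n_obs - n_groups          # N - k
    ms_treatment = ss_treatment / df_treatment
    ms_error = ss_error / df_error
    return {
        "df_treatment": df_treatment,
        "df_error": df_error,
        "ms_treatment": ms_treatment,
        "ms_error": ms_error,
        "F": ms_treatment / ms_error,
    }


result = anova_from_sums(ss_treatment=30, ss_error=48, n_obs=12, n_groups=4)
# df_treatment = 3, df_error = 8, ms_treatment = 10.0, ms_error = 6.0, F ≈ 1.667
print(result)
```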

What are the degrees of freedom associated with the sum-of-squares?

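The degrees of freedom associated with a sum of squares is the degrees of freedom of the corresponding component vector. In one-way analysis of variance with k groups, the model (treatment) sum of squares is the squared length of the vector of group-mean deviations, $\mathrm{SSTr} = \sum_{i=1}^{k} n_i (\bar{y}_i - \bar{y})^2$, where $n_i$ is the size of group i, $\bar{y}_i$ its mean and $\bar{y}$ the grand mean; it has k − 1 degrees of freedom.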

How do you find the mean squares from the degrees of freedom?

Divide each sum of squares by its degrees of freedom to obtain the mean squares. The mean square for treatment is 30 / 3 = 10. The mean square for error is 48 / 8 = 6.

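How is the error degrees of freedom related to n − m?

With n = 15 observations in m = 3 groups, the error degrees of freedom equal the total degrees of freedom minus the between-groups degrees of freedom: (15 − 1) − (3 − 1) = 14 − 2 = 12. Alternatively, we can calculate the error degrees of freedom directly as n − m = 15 − 3 = 12.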

What are the degrees of freedom for the sum of squares for error?

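In simple linear regression, the error degrees of freedom equal the total number of observations minus 2, one for each estimated parameter (intercept and slope). In this example, that is 5 - 2 = 3. The total degrees of freedom are the total number of observations minus 1, here 5 - 1 = 4.

| Y | y | y² |
|---|---|---|
| 1.00 | -1.06 | 1.1236 |
| 2.00 | -0.06 | 0.0036 |
| 1.30 | -0.76 | 0.5776 |
| 3.75 | 1.69 | 2.8561 |

Here y is the deviation of Y from its mean; one further row is not shown.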
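A minimal sketch of where the n − 2 comes from: fitting an intercept and a slope by least squares uses up two degrees of freedom, so the error mean square divides SSE by n − 2, while the total sum of squares around the mean keeps n − 1. The data and the function name `simple_regression_df` are hypothetical.

```python
from typing import Dict, Sequence


def simple_regression_df(x: Sequence[float], y: Sequence[float]) -> Dict[str, float]:
    """Least-squares fit of y = b0 + b1 * x, reporting SSE and degrees of freedom."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx                  # estimated slope
    b0 = y_bar - b1 * x_bar         # estimated intercept
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    sse = sum(r ** 2 for r in residuals)
    df_error = n - 2                # two parameters (b0, b1) were estimated
    df_total = n - 1                # deviations from y_bar must sum to zero
    return {"b0": b0, "b1": b1, "sse": sse, "sum_residuals": sum(residuals),
            "df_error": df_error, "df_total": df_total, "mse": sse / df_error}


# Hypothetical data with n = 5 observations.
fit = simple_regression_df([1, 2, 3, 4, 5], [2.0, 4.1, 5.9, 8.2, 9.8])
print(fit["df_error"], fit["df_total"])  # 3 4
```

The residuals of a least-squares fit with an intercept sum to zero, which is one of the two linear constraints that cost the error term its two degrees of freedom.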

How do you find the degrees of freedom for treatment sum of squares?

The mean sum of squares between the groups, denoted MSB, is the sum of squares between the groups divided by the between-groups degrees of freedom, m − 1, where m is the number of groups. That is, MSB = SS(Between)/(m − 1).

What is the degrees of freedom for error?

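The degrees of freedom for error are $\mathrm{DFE} = N - k$, where N is the total number of observations and k is the number of treatments, and the mean square for error is $\mathrm{MSE} = \mathrm{SSE}/\mathrm{DFE}$. The test statistic used in testing the equality of treatment means is $F = \mathrm{MST}/\mathrm{MSE}$. The critical value is the tabulated value of the F distribution for the chosen $\alpha$ level and the degrees of freedom $\mathrm{DFT} = k - 1$ and DFE.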
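The comparison against the critical value can be sketched with the Python standard library alone. This is a minimal sketch, not a library routine: it evaluates the upper-tail probability of the F distribution through the regularized incomplete beta function (using the continued-fraction method popularized by Numerical Recipes) and finds the critical value by bisection. All helper names (`reg_inc_beta`, `f_sf`, `f_critical`) are hypothetical; in practice a statistics package would supply these. The inputs DFT = 3, DFE = 8 and F = 10/6 are taken from the worked example on this page.

```python
import math


def _beta_cf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even term of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd term of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def f_sf(f_value: float, dfn: float, dfd: float) -> float:
    """Upper-tail probability P(F > f_value) for F with (dfn, dfd) degrees of freedom."""
    if f_value <= 0.0:
        return 1.0
    return reg_inc_beta(dfd / 2.0, dfn / 2.0, dfd / (dfd + dfn * f_value))


def f_critical(alpha: float, dfn: float, dfd: float, tol: float = 1e-10) -> float:
    """Upper-alpha critical value of F(dfn, dfd), found by bisection on f_sf."""
    lo, hi = 0.0, 1.0
    while f_sf(hi, dfn, dfd) > alpha:    # widen until the tail probability drops below alpha
        hi *= 2.0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if f_sf(mid, dfn, dfd) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


dft, dfe = 3, 8
f_stat = 10 / 6
crit = f_critical(0.05, dft, dfe)        # roughly 4.07 for (3, 8) at alpha = 0.05
p_value = f_sf(f_stat, dft, dfe)
reject = f_stat > crit                   # reject H0 only if F exceeds the critical value
print(round(crit, 3), round(p_value, 3), reject)
```

Because the survival function decreases monotonically in F, bisection is a simple and robust way to invert it; the result should agree with a printed F table to the table's precision.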

What is the treatment sum of squares?

The treatment sum of squares is the variation attributed to, or in this case between, the laundry detergents. The sum of squares of the residual error is the variation attributed to the error.

How do you calculate degrees of freedom?

To calculate degrees of freedom, subtract the number of constraints (relations among the values) from the number of observations. For a statistic computed around a sample mean, such as the sample variance, subtract one from the number of observations, giving n − 1, because the deviations from the mean must sum to zero.
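A small illustration of the "one constraint" idea, using a hypothetical sample of five values: once any four deviations from the mean are known, the fifth is forced, and the standard library's `statistics.variance` divides by n − 1 accordingly.

```python
import statistics

# Hypothetical sample of n = 5 observations.
sample = [4.0, 7.0, 5.0, 3.0, 6.0]
n = len(sample)
mean = sum(sample) / n
deviations = [x - mean for x in sample]

# The deviations from the mean always sum to zero, so once n - 1 of them
# are known the last one is determined: only n - 1 are free to vary.
forced_last = -sum(deviations[:-1])

# The sample variance therefore divides the sum of squared deviations by n - 1.
variance = sum(d ** 2 for d in deviations) / (n - 1)

print(deviations[-1], forced_last)            # 1.0 1.0
print(variance, statistics.variance(sample))  # 2.5 2.5
```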

How do you find df within?

The degrees of freedom within groups equal N − k, the total number of observations minus the number of groups. For example, with 9 observations in 3 groups, df within = 9 − 3 = 6.

How do you find the sum of squares with df?

Here "df" is the within-groups degrees of freedom: subtract the number of groups from the overall number of individuals (N − k). SSwithin is the sum of squares within groups. It is the sum, over all groups, of each group's degrees of freedom (n_i − 1) times that group's squared standard deviation: $SS_{\text{within}} = \sum_{i=1}^{k} (n_i - 1) s_i^2$.
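A minimal sketch checking that the standard-deviation formula gives the same SSwithin as summing squared deviations around each group's own mean. The groups are hypothetical and deliberately unequal in size (N = 9, k = 3); the function names are illustrative.

```python
import math
import statistics


def ss_within_from_sds(groups):
    """SS_within as the sum over groups of (n_i - 1) * s_i**2."""
    # statistics.variance returns s_i**2, the squared sample standard deviation.
    return sum((len(g) - 1) * statistics.variance(g) for g in groups)


def ss_within_direct(groups):
    """SS_within as squared deviations of each value from its own group mean."""
    total = 0.0
    for g in groups:
        g_mean = sum(g) / len(g)
        total += sum((x - g_mean) ** 2 for x in g)
    return total


# Hypothetical groups: N = 9 observations in k = 3 groups.
groups = [[3, 5, 4], [6, 8, 7, 7], [2, 4]]
n_total = sum(len(g) for g in groups)
k = len(groups)
df_within = n_total - k                  # N - k

ss_a = ss_within_from_sds(groups)
ss_b = ss_within_direct(groups)
assert math.isclose(ss_a, ss_b)          # both routes give the same SS_within
ms_within = ss_b / df_within
print(df_within, ss_b, ms_within)        # 6 6.0 1.0
```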

What is the degree of freedom in statistics?

Degrees of freedom refers to the maximum number of logically independent values, which are values that have the freedom to vary, in the data sample. Degrees of freedom are commonly discussed in relation to various forms of hypothesis testing in statistics, such as a chi-square test.

How many degrees of freedom are there in regression?

A simple linear regression model with one input variable has two degrees of freedom, because there are two parameters in the model (an intercept and a slope) that must be estimated from a training dataset. Adding one more column to the data (one more input variable) would add one more degree of freedom for the model.

What is degree of freedom in ANOVA?

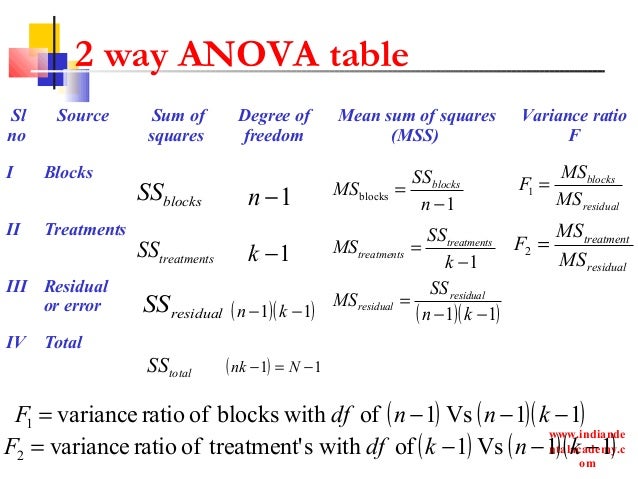

The degrees of freedom (DF) are the number of independent pieces of information. In ANOVA, once the sums of squares (e.g., SStr, SSE) are calculated, they are divided by the corresponding DF to get the mean squares (e.g., MStr, MSE), which estimate the variance attributable to the corresponding source.

What is DF total?

The total degrees of freedom are N − 1, the total sample size minus one. The within-groups (error) degrees of freedom, by contrast, equal the sum of the individual degrees of freedom for each sample. Since each sample has degrees of freedom equal to one less than its sample size, and there are k samples, this is k less than the total sample size: df = N − k.

How is SSE calculated in ANOVA table?

Here we use the property that the treatment sum of squares plus the error sum of squares equals the total sum of squares. Hence SSE = SS(Total) − SST = 45.349 − 27.897 = 17.452. Step 5: compute $\mathrm{MST} = \mathrm{SST}/(k - 1)$, $\mathrm{MSE} = \mathrm{SSE}/(N - k)$, and their ratio $F = \mathrm{MST}/\mathrm{MSE}$, where N is the total number of observations and k is the number of treatments.

What is the number of degrees of freedom?

In statistics, the number of degrees of freedom is the number of values in the final calculation of a statistic that are free to vary. In mechanics, the number of independent ways in which a dynamic system can move without violating any constraint imposed on it is called its number of degrees of freedom. In other words, the number of degrees ...

What is the degree of freedom of a vector?

Mathematically, degrees of freedom is the number of dimensions of the domain of a random vector, or essentially the number of "free" components (how many components need to be known before the vector is fully determined).

What is the definition of degree of freedom?

The term is most often used in the context of linear models ( linear regression, analysis of variance ), where certain random vectors are constrained to lie in linear subspaces, and the number of degrees of freedom is the dimension of the subspace.

Who first used degrees of freedom?

Although the basic concept of degrees of freedom was recognized as early as 1821 in the work of German astronomer and mathematician Carl Friedrich Gauss, its modern definition and usage was first elaborated by English statistician William Sealy Gosset in his 1908 Biometrika article "The Probable Error of a Mean", published under the pen name "Student". While Gosset did not actually use the term 'degrees of freedom', he explained the concept in the course of developing what became known as Student's t-distribution. The term itself was popularized by English statistician and biologist Ronald Fisher, beginning with his 1922 work on chi squares.

What does Error mean in an ANOVA table?

Error means "the variability within the groups" or "unexplained random error." Sometimes the row heading is labeled Within to make clear that the row concerns the variation within the groups. Total means "the total variation in the data from the grand mean" (that is, ignoring the factor of interest).

What do the column headings of an ANOVA table mean?

In the learning study, the factor is the learning method. DF means "the degrees of freedom in the source." SS means "the sum of squares due to the source." MS means "the mean sum of squares due to the source." F means "the F-statistic." P means "the P-value."

What does Factor mean in an ANOVA table?

Factor means "the variability due to the factor of interest." In the tire example, the factor was the brand of the tire; in the learning example, the factor was the method of learning.

Data and Sample Means

Suppose we have four independent populations that satisfy the conditions for single-factor ANOVA. We wish to test the null hypothesis $H_0: \mu_1 = \mu_2 = \mu_3 = \mu_4$. For this example, we use a sample of size three from each of the populations being studied. The data from our samples are:

Sum of Squares of Error

We now calculate the sum of the squared deviations of each observation from its sample mean. This is called the sum of squares of error. For these data, it equals 48.

Sum of Squares of Treatment

Now we calculate the sum of squares of treatment. Here we take the squared deviation of each sample mean from the overall mean, add these up, and multiply the total by the common sample size, n = 3. For these data, the sum of squares of treatment equals 30.

Degrees of Freedom

Before proceeding to the next step, we need the degrees of freedom. There are 12 data values and four samples. Thus the number of degrees of freedom of treatment is 4 – 1 = 3. The number of degrees of freedom of error is 12 – 4 = 8.

Mean Squares

We now divide each sum of squares by the appropriate number of degrees of freedom to obtain the mean squares. The mean square for treatment is 30 / 3 = 10, and the mean square for error is 48 / 8 = 6.

The F-statistic

The final step is to divide the mean square for treatment by the mean square for error. This is the F-statistic from the data. Thus for our example F = 10/6 = 5/3 = 1.667.
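The steps of this walkthrough can be combined into one function that builds every row of the one-way ANOVA table from raw observations. The section's own sample data are not reproduced on this page, so the numbers below are hypothetical (four groups of three, matching the layout of the example), and the function name `one_way_anova` is illustrative. The sketch also lets you confirm that the treatment and error sums of squares add up to the total sum of squares.

```python
from typing import Dict, Sequence


def one_way_anova(groups: Sequence[Sequence[float]]) -> Dict[str, float]:
    """One-way ANOVA quantities computed from raw observations, group by group."""
    values = [y for g in groups for y in g]
    n_total = len(values)
    k = len(groups)
    grand_mean = sum(values) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Treatment SS: squared deviation of each group mean from the grand mean,
    # weighted by that group's sample size.
    ss_treatment = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Error SS: squared deviations of each observation from its own group mean.
    ss_error = sum((y - m) ** 2 for g, m in zip(groups, group_means) for y in g)
    # Total SS: squared deviations of each observation from the grand mean.
    ss_total = sum((y - grand_mean) ** 2 for y in values)

    df_treatment = k - 1
    df_error = n_total - k
    ms_treatment = ss_treatment / df_treatment
    ms_error = ss_error / df_error
    return {
        "ss_treatment": ss_treatment, "ss_error": ss_error, "ss_total": ss_total,
        "df_treatment": df_treatment, "df_error": df_error,
        "ms_treatment": ms_treatment, "ms_error": ms_error,
        "F": ms_treatment / ms_error,
    }


# Hypothetical data: four groups of three observations (N = 12, k = 4).
data = [[10, 12, 11], [9, 10, 11], [7, 9, 8], [12, 10, 11]]
table = one_way_anova(data)
print(table)
```

Because the treatment sum of squares weights each squared mean deviation by its own group size, the same function also handles unequal group sizes.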

Overview

In analysis of variance (ANOVA)

In statistical testing problems, one usually is not interested in the component vectors themselves, but rather in their squared lengths, or Sum of Squares. The degrees of freedom associated with a sum-of-squares is the degrees-of-freedom of the corresponding component vectors.

Notation

In equations, the typical symbol for degrees of freedom is ν (lowercase Greek letter nu). In text and tables, the abbreviation "d.f." is commonly used. R. A. Fisher used n to symbolize degrees of freedom but modern usage typically reserves n for sample size.

Of random vectors

Geometrically, the degrees of freedom can be interpreted as the dimension of certain vector subspaces. As a starting point, suppose that we have a sample of independent normally distributed observations, $X_1, \dots, X_n$.

This can be represented as an n-dimensional random vector: $\mathbf{X} = (X_1, \dots, X_n)^\top$.

Since this random vector can lie anywhere in n-dimensional space, it has n degrees of freedom.

Now, let $\bar{X}$ be the sample mean. The random vector can be decomposed as the sum of the sample mean plus a vec…

In structural equation models

When the results of structural equation models (SEM) are presented, they generally include one or more indices of overall model fit, the most common of which is a χ² statistic. This forms the basis for other indices that are commonly reported. Although it is these other statistics that are most commonly interpreted, the degrees of freedom of the χ² are essential to understanding model fit as well as the nature of the model itself.

Degrees of freedom in SEM are computed as a difference between the number of unique pieces of information t…
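A minimal sketch of the usual SEM bookkeeping, assuming a covariance-structure model without a mean structure, in which the unique pieces of information are the p(p + 1)/2 distinct variances and covariances of the p observed variables. The function name and the example model are hypothetical.

```python
def sem_degrees_of_freedom(n_observed: int, n_free_params: int) -> int:
    """df = distinct variances/covariances of the observed variables minus free parameters.

    Assumes a covariance-structure model with no mean structure.
    """
    unique_moments = n_observed * (n_observed + 1) // 2
    df = unique_moments - n_free_params
    if df < 0:
        raise ValueError("more free parameters than unique moments: model is not identified")
    return df


# Hypothetical one-factor model: 4 indicators, factor variance fixed to 1,
# so the free parameters are 4 loadings + 4 residual variances = 8.
print(sem_degrees_of_freedom(4, 8))  # 10 - 8 = 2
```

A model with zero degrees of freedom (just identified) reproduces the observed covariances exactly, so its χ² carries no information about fit.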

In linear models

The demonstration of the t and chi-squared distributions for one-sample problems above is the simplest example where degrees-of-freedom arise. However, similar geometry and vector decompositions underlie much of the theory of linear models, including linear regression and analysis of variance. An explicit example based on comparison of three means is presented here; the geometry of linear models is discussed in more complete detail by Christensen (2002).

In probability distributions

Several commonly encountered statistical distributions (Student's t, chi-squared, F) have parameters that are commonly referred to as degrees of freedom. This terminology simply reflects that in many applications where these distributions occur, the parameter corresponds to the degrees of freedom of an underlying random vector, as in the preceding ANOVA example. Another simple example is: if $X_1, \dots, X_n$ are independent normal random variables with mean $\mu$ and variance $\sigma^2$, the statistic $\sum_{i=1}^{n} (X_i - \bar{X})^2 / \sigma^2$ follows a chi-squared distribution with n − 1 degrees of freedom.
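The chi-squared case can be checked by simulation. A minimal sketch, assuming independent normal observations with known variance σ²: the statistic Σ(X_i − X̄)²/σ² should follow a chi-squared distribution with n − 1 degrees of freedom, whose mean is n − 1 and variance 2(n − 1). The function name, sample size, and seed are arbitrary choices for illustration.

```python
import random


def simulate_scaled_ss(n: int, mu: float = 0.0, sigma: float = 1.0,
                       reps: int = 20000, seed: int = 0) -> list:
    """Draw `reps` values of sum((X_i - X_bar)**2) / sigma**2 for samples of size n."""
    rng = random.Random(seed)
    draws = []
    for _ in range(reps):
        xs = [rng.gauss(mu, sigma) for _ in range(n)]
        x_bar = sum(xs) / n
        draws.append(sum((x - x_bar) ** 2 for x in xs) / sigma ** 2)
    return draws


n = 5
draws = simulate_scaled_ss(n)
mean_stat = sum(draws) / len(draws)
var_stat = sum((d - mean_stat) ** 2 for d in draws) / (len(draws) - 1)
# Expect mean near n - 1 = 4 (not n = 5) and variance near 2 * (n - 1) = 8.
print(round(mean_stat, 2), round(var_stat, 2))
```

Centering on the sample mean rather than the true mean removes one dimension, which is why the simulated mean lands near n − 1 instead of n.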