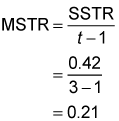

In ANOVA, mean squares are used to determine whether factors (treatments) are significant.

- The treatment mean square is obtained by dividing the treatment sum of squares by the treatment degrees of freedom. It represents the variation between the sample means.

- The mean square of the error (MSE) is obtained by dividing the sum of squares of the residual error by the error degrees of freedom. It represents the variation within the samples.
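For a one-way ANOVA, the two definitions above can be written as formulas. The symbols are notation assumed here rather than taken from the text: k is the number of treatment groups, N is the total number of observations, and SSTr and MSTr denote the treatment sum of squares and treatment mean square.

```latex
\[
\mathrm{MSTr} = \frac{\mathrm{SSTr}}{k - 1}, \qquad
\mathrm{MSE} = \frac{\mathrm{SSE}}{N - k}, \qquad
F = \frac{\mathrm{MSTr}}{\mathrm{MSE}}
\]
```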

How to calculate the mean square of error in ANOVA?

The mean square of the error (MSE) is obtained by dividing the sum of squares of the residual error by the error degrees of freedom.

How do you calculate the sum of squares of treatment?

Calculate the sum of squares of treatment. We square the deviation of each sample mean from the overall mean and multiply each squared deviation by the number of observations in that sample. The sum of these products is the sum of squares of treatment, abbreviated SST. (Some texts write SSTr or SSB for this quantity and reserve SST for the total sum of squares.)
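As a minimal sketch of this calculation, the function below follows the standard definition, in which each squared deviation is weighted by the number of observations in that sample. The function name `treatment_sum_of_squares` and the small data set are illustrative assumptions, not part of the original text.

```python
from statistics import fmean


def treatment_sum_of_squares(samples):
    """Sum over samples of n_i * (sample mean - overall mean) ** 2."""
    all_values = [x for sample in samples for x in sample]
    overall_mean = fmean(all_values)
    return sum(len(s) * (fmean(s) - overall_mean) ** 2 for s in samples)


# Illustrative data (an assumption for this sketch): three samples of size three.
samples = [[12, 9, 12], [7, 10, 13], [5, 8, 11]]
ss_treatment = treatment_sum_of_squares(samples)
print(ss_treatment)
```

Weighting by `len(s)` rather than by a single fixed factor means the same function also handles samples of unequal size.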

How do you construct the test statistic for ANOVA?

Compared with other types of hypothesis tests, constructing the test statistic for ANOVA is quite complex. You construct the test statistic (or F-statistic) from the error mean square (MSE) and the treatment mean square (MSTR) as the ratio F = MSTR / MSE.

How do you find mean square in ANOVA?

Each mean square value is computed by dividing a sum-of-squares value by the corresponding degrees of freedom. In other words, for each row in the ANOVA table divide the SS value by the df value to compute the MS value.

What is SSE in ANOVA table?

The abbreviations SSE, SSerror, SSError and SS(Within) are synonymous for “error sum of squares”. Associated with each sum of squares is its degrees of freedom. The total degrees of freedom is n−1, where n is the total number of observations.

How do I find my MSE?

To calculate MSE by hand, follow these instructions:

1. Compute differences between the observed values and the predictions.
2. Square each of these differences.
3. Add all these squared differences together.
4. Divide this sum by the sample length.

That's it, you've found the MSE of your data!
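The four steps can be sketched in a few lines of Python. The function name `mean_squared_error` and the observed and predicted values are hypothetical, chosen only to illustrate the arithmetic.

```python
def mean_squared_error(observed, predicted):
    """Average of the squared differences between observations and predictions."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    differences = [o - p for o, p in zip(observed, predicted)]  # step 1
    squared = [d ** 2 for d in differences]                     # step 2
    total = sum(squared)                                        # step 3
    return total / len(observed)                                # step 4


# Hypothetical values for illustration only.
observed = [3.0, 5.0, 7.0]
predicted = [2.0, 5.0, 9.0]
mse = mean_squared_error(observed, predicted)
print(mse)  # (1 + 0 + 4) / 3
```

Note that this prediction-error MSE divides by the sample length, whereas the MSE in an ANOVA table divides the error sum of squares by the error degrees of freedom.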

What is the formula of mean square?

Mean square between (MSB) is used to calculate the F ratio (sometimes called the F-value): F ratio = MSB/MSE.

How do you calculate SSE and SST?

We can verify that SST = SSR + SSE: ....

- Sum of Squares Error (SSE): 331.0749
- R-squared = SSR / SST
- R-squared = 917.4751 / 1248.55
- R-squared = 0.7348
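The identity and the R-squared value can be checked with the figures quoted in this answer. This is only a sketch of the arithmetic; the variable names are chosen here for readability.

```python
ssr = 917.4751  # regression sum of squares, as quoted in the answer
sse = 331.0749  # error sum of squares, as quoted in the answer

sst = ssr + sse        # total sum of squares
r_squared = ssr / sst  # share of total variation explained by the regression

print(round(sst, 4), round(r_squared, 4))
```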

How is SSE and SSG calculated?

For SSG, the squared difference between a group's mean and the overall mean, (x̄1 − x̄)² for the first group, is counted once for every data point in that group. With three data points in the first group it appears three times, and the same is done for each of the other groups.

How do you calculate MSE in Excel?

To calculate MSE in Excel, we can perform the following steps:

1. Enter the actual values and forecasted values in two separate columns. ...
2. Calculate the squared error for each row. Recall that the squared error is calculated as (actual – forecast)². ...
3. Calculate the mean squared error.

How do you calculate MSR and MSE?

The mean square due to regression, denoted MSR, is computed by dividing SSR by a number referred to as its degrees of freedom; in a similar manner, the mean square due to error, MSE, is computed by dividing SSE by its degrees of freedom.

How do you calculate SSE from MSE?

MSE = [1/n] SSE, so SSE = n × MSE, where n is the number of observations. This formula enables you to evaluate small holdout samples.

How do you find the mean square example?

Here's our MSE formula. It's 1 over n, where n is the number of items in the sample, multiplied by sigma (that just means to add everything up), and we're going to find the differences.

How do you calculate the mean squared within value?

“df” is the within-groups degrees of freedom. To calculate this, subtract the number of groups from the overall number of individuals. SSwithin is the sum of squares within groups. The formula is: degrees of freedom for each individual group (n − 1) × squared standard deviation for each group, summed over all groups. The within mean square is SSwithin divided by df.
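A short sketch of this recipe, assuming each group has at least two observations so that its standard deviation is defined. The function name `mean_square_within` and the data are illustrative assumptions.

```python
from statistics import stdev


def mean_square_within(groups):
    """SS_within / df_within, built from each group's size and standard deviation."""
    # (n_i - 1) * s_i ** 2 for every group, added together
    ss_within = sum((len(g) - 1) * stdev(g) ** 2 for g in groups)
    # overall number of individuals minus the number of groups
    df_within = sum(len(g) for g in groups) - len(groups)
    return ss_within / df_within


# Illustrative data (an assumption for this sketch): three groups of size three.
groups = [[12, 9, 12], [7, 10, 13], [5, 8, 11]]
ms_within = mean_square_within(groups)
print(ms_within)
```

Here the three groups contribute 6, 18 and 18 to SSwithin, and df is 9 − 3 = 6.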

What is a mean square in statistics?

In general, the mean square of a set of values is the arithmetic mean of the squares of their differences from some given value, namely their second moment about that value.

Data and Sample Means

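Suppose we have four independent populations that satisfy the conditions for single factor ANOVA. We wish to test the null hypothesis H0: μ1 = μ2 = μ3 = μ4. For purposes of this example, we will use a sample of size three from each of the populations being studied. The data from our samples is:

- Sample from population #1: 12, 9, 12 (sample mean 11)
- Sample from population #2: 7, 10, 13 (sample mean 10)
- Sample from population #3: 5, 8, 11 (sample mean 8)
- Sample from population #4: sample mean 7

The overall mean of the data is 9.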

How to find mean squares?

Mean squares represent an estimate of population variance. It is calculated by dividing the corresponding sum of squares by the degrees of freedom.

Why does Minitab have negative estimates?

Minitab displays negative variance component estimates because they sometimes indicate that the model being fit is inappropriate for the data. Variance components are not estimated for fixed terms.

What is the MSE in regression?

The MSE is the variance (s²) around the fitted regression line. Dividing the MS (term) by the MSE gives F, which follows the F-distribution with degrees of freedom for the term and degrees of freedom for error.

How many observations are there in a laundry detergent experiment?

For example, you do an experiment to test the effectiveness of three laundry detergents. You collect 20 observations for each detergent. The variation in means between Detergent 1, Detergent 2, and Detergent 3 is represented by the treatment mean square.

Does adjusted sum of squares depend on the order of the factors?

The adjusted sum of squares does not depend on the order the factors are entered into the model. It is the unique portion of SS Regression explained by a factor, given all other factors in the model, regardless of the order they were entered into the model.

What is the p-value of 0.071?

This provides a permutation-based p-value of 0.071 and suggests marginal evidence against the null hypothesis of no difference in the true means. We would interpret this as saying that there is a 7.1% chance of getting a SS A as large or larger than we observed, given that the null hypothesis is true.

Is SS A positive or negative?

In contrast to our previous test statistics where positive and negative differences were possible, SS A is always positive with a value of 0 corresponding to no variation in the means. The larger the SS A, the more variation there was in the means.

Can total variation change?

In a permutation situation, the total variation (SS Total) cannot change - it is the same responses varying around the grand mean. However, the amount of variation attributed to variation among the means and in the residuals can change if we change which observations go with which group.
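The following sketch illustrates both points: shuffling the group labels leaves SS Total unchanged while SS A and the residual sum of squares trade off, and the permutation p-value is the share of shuffles whose SS A is at least as large as the observed one. The data, the seed, the number of permutations and the helper `sums_of_squares` are all assumptions made for illustration.

```python
import random
from statistics import fmean


def sums_of_squares(values, labels):
    """Return (SS_A, SS_E, SS_Total) for responses grouped by label."""
    grand_mean = fmean(values)
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(label, []).append(value)
    ss_a = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups.values())
    ss_e = sum((v - fmean(g)) ** 2 for g in groups.values() for v in g)
    ss_total = sum((v - grand_mean) ** 2 for v in values)
    return ss_a, ss_e, ss_total


# Illustrative responses and group labels (assumed for this sketch).
values = [12, 9, 12, 7, 10, 13, 5, 8, 11]
labels = ["a"] * 3 + ["b"] * 3 + ["c"] * 3

observed_ss_a, observed_ss_e, observed_total = sums_of_squares(values, labels)

rng = random.Random(0)  # fixed seed so the run is repeatable
n_permutations = 1000
at_least_as_large = 0
max_gap = 0.0
for _ in range(n_permutations):
    shuffled = labels[:]
    rng.shuffle(shuffled)  # reassign observations to groups at random
    ss_a, ss_e, ss_total = sums_of_squares(values, shuffled)
    # SS_A + SS_E should always rebuild the same SS_Total
    max_gap = max(max_gap, abs(ss_a + ss_e - observed_total))
    if ss_a >= observed_ss_a - 1e-12:
        at_least_as_large += 1

p_value = at_least_as_large / n_permutations
print(observed_total, max_gap, p_value)
```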

How is Anova test performed?

The ANOVA test is performed by comparing two types of variation: the variation between the sample means and the variation within each of the samples. The one-way ANOVA test statistic is the ratio of the two, F = (mean square between the samples) / (mean square within the samples).

What is the purpose of ANOVA?

Analysis of variance, or ANOVA, is a strong statistical technique that is used to show the difference between two or more means or components through significance tests. It also shows us a way to make multiple comparisons of several population means.

How to find the mean square?

The "mean square" is calculated by dividing the sum of squares by the degrees of freedom for that source. The mean square is analogous to the variance (i.e. the square of the standard deviation) of a distribution. Thus a large mean square represents a large variance, and vice versa.

What is an ANOVA test?

An ANOVA tests the null hypothesis that there is no difference among the mean values for the different treatment groups. Although it is possible to conduct an ANOVA by hand, no one in their right mind having access to computer software would do so. Setting up an ANOVA using RStudio is quite easy.

What is the purpose of ANOVA?

The fundamental principle in ANOVA is to determine how many times greater the variability due to the treatment is than the variability that we cannot explain.

What are differences caused by experimental treatment?

Differences caused by an experimental treatment can be thought of as just one part of the overall variability of measurements that originates from many sources. If we measured the strength of the response of cockroach retinas when stimulated by light, we would get a range of measurements. Some of the variability in measurements could be due to ...

Sum of Squares of Error

- We now calculate the sum of the squared deviations from each sample mean. This is called the sum of squares of error.
  1. For the sample from population #1: (12 – 11)² + (9 – 11)² + (12 – 11)² = 6
  2. For the sample from population #2: (7 – 10)² + (10 – 10)² + (13 – 10)² = 18
  3. For the sample from population #3: (5 – 8)² + (8 – 8)² + (11 – 8)² = 18
  4. For the sample from populatio…

Sum of Squares of Treatment

- Now we calculate the sum of squares of treatment. Here we look at the squared deviations of each sample mean from the overall mean, and multiply their sum by the number of observations in each sample (three here): 3[(11 – 9)² + (10 – 9)² + (8 – 9)² + (7 – 9)²] = 3[4 + 1 + 1 + 4] = 30.

Degrees of Freedom

- Before proceeding to the next step, we need the degrees of freedom. There are 12 data values and four samples. Thus the number of degrees of freedom of treatment is 4 – 1 = 3. The number of degrees of freedom of error is 12 – 4 = 8.

Mean Squares

- We now divide our sum of squares by the appropriate number of degrees of freedom in order to obtain the mean squares.
  1. The mean square for treatment is 30 / 3 = 10.
  2. The mean square for error is 48 / 8 = 6.

The F-Statistic

- The final step is to divide the mean square for treatment by the mean square for error. This is the F-statistic from the data. Thus for our example F = 10/6 = 5/3 ≈ 1.667. Tables of values or software can be used to determine how likely it is to obtain a value of the F-statistic as extreme as this value by chance alone.
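The whole worked example can be retraced from its summary figures. This sketch takes the four sample means (11, 10, 8, 7), the sample size of three and the error sum of squares of 48 as given in the example; the variable names are chosen here for readability.

```python
sample_means = [11, 10, 8, 7]  # sample means from the example
n_per_sample = 3               # observations in each sample
ss_error = 48                  # error sum of squares used in the example

k = len(sample_means)
# With equal sample sizes the overall mean is the mean of the sample means.
overall_mean = sum(sample_means) / k
ss_treatment = n_per_sample * sum((m - overall_mean) ** 2 for m in sample_means)

df_treatment = k - 1             # 4 - 1 = 3
df_error = k * n_per_sample - k  # 12 - 4 = 8

ms_treatment = ss_treatment / df_treatment
ms_error = ss_error / df_error
f_statistic = ms_treatment / ms_error

print(ss_treatment, ms_treatment, ms_error, round(f_statistic, 3))
# prints: 30.0 10.0 6.0 1.667
```

A p-value would then come from the F distribution with 3 and 8 degrees of freedom, for example with `scipy.stats.f.sf(f_statistic, 3, 8)` if SciPy is available.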